This set of Neural Networks Multiple Choice Questions & Answers (MCQs) focuses on “Backpropagation Algorithm – Set 2”.

1. Backpropagation is capable of handling large learning problems.

a) True

b) False

Explanation: Multilayered networks can compute a wider range of Boolean functions than networks with a single layer of computing units. Backpropagation is the standard method for training multilayered networks, so it is capable of handling such large learning problems.

2. One of the more popular activation functions for back propagation networks is the sigmoid.

a) True

b) False

Explanation: One of the more popular activation functions for back propagation networks is the sigmoid, a real function \(s_c : \mathbb{R} \to (0, 1)\) defined by \(s_c(x) = \frac{1}{1 + e^{-cx}}\). The constant \(c\) can be selected arbitrarily, and its reciprocal \(1/c\) is called the temperature parameter in stochastic neural networks. The shape of the sigmoid changes with the value of \(c\).
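The effect of the constant c on the shape of the sigmoid can be checked numerically. The following is a minimal sketch; the function name `sigmoid` and the sample values of c and x are illustrative choices, not part of the question.

```python
import math

def sigmoid(x: float, c: float = 1.0) -> float:
    """Sigmoid s_c(x) = 1 / (1 + exp(-c * x)); c is the steepness constant."""
    return 1.0 / (1.0 + math.exp(-c * x))

# Every curve passes through 0.5 at x = 0; a larger c makes the
# transition around x = 0 steeper.
for c in (0.5, 1.0, 5.0):
    values = [round(sigmoid(x, c), 3) for x in (-2.0, 0.0, 2.0)]
    print(f"c = {c}: {values}")
```

For a fixed positive x, the output moves closer to 1 as c grows, which is the change of shape the explanation refers to.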

4. A differentiable activation function makes the function computed by a neural network differentiable and the back propagation algorithm is applicable to it.

a) True

b) False

Explanation: A differentiable activation function makes the function computed by a neural network differentiable (assuming that the integration function at each node is just the sum of the inputs), since the network itself only computes compositions of functions. The error function therefore also becomes differentiable, so the back propagation algorithm can be applied.
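Because the network is a composition of differentiable functions, its derivative follows from the chain rule. The sketch below compares a chain-rule derivative with a finite-difference estimate for a hypothetical two-unit chain; the weights `w1`, `w2` and all function names are assumptions made for illustration.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def network(x, w1=0.8, w2=-1.5):
    # Composition of two sigmoid units: output = s(w2 * s(w1 * x))
    hidden = sigmoid(w1 * x)
    return sigmoid(w2 * hidden)

def network_derivative(x, w1=0.8, w2=-1.5):
    # Chain rule, using s'(a) = s(a) * (1 - s(a)) for each unit
    hidden = sigmoid(w1 * x)
    output = sigmoid(w2 * hidden)
    return output * (1.0 - output) * w2 * hidden * (1.0 - hidden) * w1

def central_difference(f, x, h=1e-6):
    # Numerical estimate of df/dx, used only as a cross-check
    return (f(x + h) - f(x - h)) / (2.0 * h)

x = 0.3
gap = abs(network_derivative(x) - central_difference(network, x))
print(gap < 1e-8)
```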

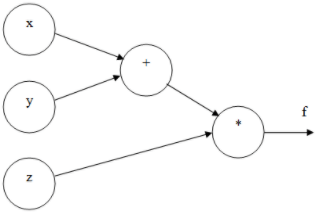

5. What is the final value of f(x, y, z) in the figure, given that x = -5, y = 7, and z = 14?

a) 28

b) -105

c) 16

d) -133

Explanation: From the figure f(x, y, z) = (x + y) * z. Given that x = -5, y = 7, and z = 14.

f(x, y, z) = (x + y) * z

= (-5 + 7) * 14

= 2 * 14

= 28
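The same computation can be written as a small computational graph. The forward pass reproduces the value above; the backward pass is an addition that is not asked for in the question, included only to show how backpropagation would traverse this graph. The intermediate name `q` and the function name are assumptions.

```python
def forward_backward(x, y, z):
    # Forward pass: q = x + y, then f = q * z
    q = x + y
    f = q * z

    # Backward pass: apply the chain rule from f back to the inputs
    df_dq = z          # d(q * z) / dq
    df_dz = q          # d(q * z) / dz
    df_dx = df_dq * 1  # dq/dx = 1
    df_dy = df_dq * 1  # dq/dy = 1
    return f, (df_dx, df_dy, df_dz)

f, grads = forward_backward(-5, 7, 14)
print(f, grads)  # prints: 28 (14, 14, 2)
```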

6. Which of the following is not a step in the backpropagation algorithm?

a) Feed-forward computation

b) Backpropagation to the output layer

c) Backpropagation to the input layer

d) Backpropagation to the hidden layer

Explanation: After choosing the weights of the network randomly, the backpropagation algorithm is used to compute the necessary corrections. The algorithm can be decomposed into the following four steps: feed-forward computation, backpropagation to the output layer, backpropagation to the hidden layer, and weight updates. So it does not include backpropagation to the input layer.
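The four steps can be sketched for a single training example. This is a minimal illustration rather than a reference implementation: the 2-2-1 layout, sigmoid units, squared error, absence of biases, the learning rate `lr`, and the name `train_step` are all assumptions.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train_step(x, target, w_hidden, w_output, lr=0.5):
    """One backpropagation step for a 2-2-1 sigmoid network with squared error."""
    # 1. Feed-forward computation
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_output, hidden)))

    # 2. Backpropagation to the output layer
    delta_out = (output - target) * output * (1.0 - output)

    # 3. Backpropagation to the hidden layer
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_output, hidden)]

    # 4. Weight updates (plain gradient descent)
    new_w_output = [w - lr * delta_out * h for w, h in zip(w_output, hidden)]
    new_w_hidden = [[w - lr * d * xi for w, xi in zip(row, x)]
                    for row, d in zip(w_hidden, delta_hidden)]

    error = 0.5 * (output - target) ** 2
    return new_w_hidden, new_w_output, error

# Weights are chosen randomly, as in the explanation above.
random.seed(0)
w_hidden = [[random.uniform(-1, 1) for _ in range(2)] for _ in range(2)]
w_output = [random.uniform(-1, 1) for _ in range(2)]

x, target = [1.0, 0.0], 1.0
w_hidden, w_output, error_before = train_step(x, target, w_hidden, w_output)
_, _, error_after = train_step(x, target, w_hidden, w_output)
print(error_before, error_after)
```

Note that no error term is computed for the input layer: the inputs are data, not trainable units.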

7. Which of the following statements is not true about backpropagation?

a) Initially the weights are assigned at random

b) The algorithm iterates through many cycles of two processes until a stopping criterion is reached

c) Each epoch includes a forward phase and a backward phase

d) All the cycles of execution together are known as an epoch

Explanation: Initially the weights are assigned at random. Then the algorithm iterates through many cycles of two processes until a stopping criterion is reached. Each individual cycle, not all the cycles together, is known as an epoch. Each epoch includes a forward phase and a backward phase.
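The structure described above (random initial weights, repeated epochs, a stopping criterion) can be sketched as a training loop. Everything specific here is an assumption for illustration: a single sigmoid neuron, the OR data set, the learning rate, the error tolerance, and the epoch limit.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def train(data, lr=0.5, max_epochs=5000, tolerance=0.01, seed=0):
    rng = random.Random(seed)
    # Initially the weights are assigned at random.
    weights = [rng.uniform(-0.5, 0.5) for _ in range(2)]
    bias = rng.uniform(-0.5, 0.5)
    history = []
    for _ in range(max_epochs):
        total_error = 0.0
        for inputs, target in data:
            # Forward phase: compute the neuron's output
            output = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
            # Backward phase: propagate the error and adjust the weights
            delta = (output - target) * output * (1.0 - output)
            weights = [w - lr * delta * x for w, x in zip(weights, inputs)]
            bias -= lr * delta
            total_error += 0.5 * (output - target) ** 2
        history.append(total_error)
        # Stopping criterion: the error of this epoch is small enough
        if total_error < tolerance:
            break
    return weights, bias, history

or_data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias, history = train(or_data)
print(len(history), history[0], history[-1])
```

Each pass of the outer loop is one epoch, and `history` records one error value per epoch.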

8. Which of the following statements is not true about the forward phase of backpropagation in a neural network?

a) The neurons are activated in sequence from the input layer to the output layer

b) Applying each neuron’s weights and activation function from the input layer to the output layer

c) Applying each neuron’s weights and activation function from the output layer to the input layer

d) Upon reaching the final layer, an output signal is produced

Explanation: The forward phase is one of the two phases of backpropagation in a neural network. The neurons are activated in sequence from the input layer to the output layer, applying each neuron’s weights and activation function along the way. Upon reaching the final layer, an output signal is produced.

9. Which of the following statements is not true about the backward phase of backpropagation in a neural network?

a) The network’s output signal resulting from the forward phase is compared to the true target value in the training data

b) The difference between the network’s output signal and the true value results in an error

c) The error is propagated backwards in the network to modify the connection weights between neurons

d) Modifying the weights cannot reduce the future errors

Explanation: In the backward phase of backpropagation in a neural network, the network’s output signal resulting from the forward phase is compared to the true target value in the training data. The difference between the network’s output signal and the true value results in an error that is propagated backwards in the network to modify the connection weights between neurons and reduce future errors.

10. Which of the following statements is not true about the backpropagation architecture?

a) Backpropagation is a multilayer feed forward network with one layer of z-hidden units

b) The input layer is connected to the hidden layer by means of interconnection weights

c) The hidden layer is connected to the output layer by means of interconnection weights

d) Only the hidden units can have bias

Explanation: Both the hidden and output layers can have a bias in the backpropagation architecture. A bias term is required because it allows the activation function to be shifted to the left or right. The weights of the bias terms are changed by the backpropagation algorithm and are optimized using gradient descent or a more advanced optimization technique.
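The shifting effect of the bias can be seen on a single sigmoid unit. In this sketch the unit computes sigmoid(weight * x + bias), so its output crosses 0.5 at x = -bias / weight. The names `neuron` and `midpoint` and the sample bias values are illustrative assumptions.

```python
import math

def neuron(x, weight=1.0, bias=0.0):
    """Single sigmoid unit: sigmoid(weight * x + bias)."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

def midpoint(weight, bias):
    """Input at which the output crosses 0.5, i.e. weight * x + bias = 0."""
    return -bias / weight

# A positive bias moves the curve to the left, a negative bias to the right.
for bias in (-2.0, 0.0, 2.0):
    print(f"bias = {bias}: midpoint at x = {midpoint(1.0, bias)}, "
          f"output at x = 0 is {neuron(0.0, bias=bias):.3f}")
```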

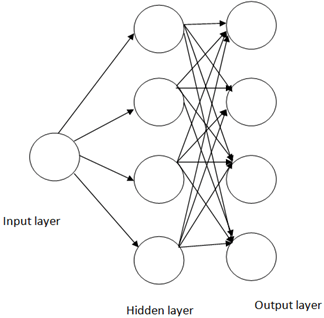

11. The figure shows a feed forward network.

a) False

b) True

Explanation: A feedforward neural network is an artificial neural network where the connections between the nodes do not form a cycle. Here the network activation flows only in one direction from the input layer to the output layer, passing through the hidden layer. Each unit in a layer is connected in the forward direction to every unit in the next layer.

12. Which of the following statements is not true about the backpropagation architecture?

a) It resembles a multi layered feed forward network

b) Increasing the number of hidden layers increases the computational complexity of the network

c) Increasing the number of hidden layers may make the time taken to minimize the error low

d) Increasing the number of hidden layers may make the time taken for convergence very high

Explanation: The backpropagation architecture resembles a multi layered feed forward network. Increasing the number of hidden layers increases the computational complexity of the network. As a result, the time taken to converge and to minimize the error may be very high.
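One rough way to see the growth in complexity is to count trainable parameters as hidden layers are added. This is only a proxy, and the layer sizes (10 inputs, 32 units per hidden layer, 1 output) and the helper `count_parameters` are assumptions chosen for illustration.

```python
def count_parameters(layer_sizes):
    """Weights plus biases in a fully connected feed forward network."""
    return sum((n_in + 1) * n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

for hidden_layers in (1, 2, 4):
    sizes = [10] + [32] * hidden_layers + [1]
    print(hidden_layers, count_parameters(sizes))
```

Every extra hidden layer adds another full weight matrix that must be evaluated in the forward phase and updated in the backward phase.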

13. Backpropagation is a short form for backward propagation of errors.

a) True

b) False

Explanation: Backpropagation is short for backward propagation of errors. It is a standard method of training artificial neural networks. The method calculates the gradient of a loss function with respect to all the weights in the network.
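One common way to write this gradient is sketched below. The notation is an assumption, not taken from the question: \(E\) is a squared-error loss, \(t_k\) the target for output unit \(k\), \(o_i\) the output of unit \(i\), \(w_{ij}\) the weight from unit \(i\) to unit \(j\), \(\delta_j\) the backpropagated error at unit \(j\), and \(\gamma\) the learning rate. Sigmoid units are assumed.

\[
E = \frac{1}{2} \sum_{k} (o_k - t_k)^2, \qquad
\frac{\partial E}{\partial w_{ij}} = o_i \, \delta_j, \qquad
w_{ij} \leftarrow w_{ij} - \gamma \, \frac{\partial E}{\partial w_{ij}}
\]

\[
\delta_k = (o_k - t_k) \, o_k (1 - o_k) \ \text{(output units)}, \qquad
\delta_j = o_j (1 - o_j) \sum_{k} w_{jk} \, \delta_k \ \text{(hidden units)}
\]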

14. Which of the following is not a feature of the back propagation algorithm?

a) It is fast, simple and easy to program

b) It does not need any special mention of the features of the function to be learned

c) It has more parameters to tune apart from the number of inputs

d) It is a flexible method as it does not require prior knowledge about the network

Explanation: Backpropagation is fast, simple and easy to program. It has no parameters to tune apart from the number of inputs. It is a flexible method, as it does not require prior knowledge about the network, and it does not need any special mention of the features of the function to be learned.

15. Which of the following statements is not true about backpropagation?

a) Static backpropagation produces a mapping of a static input for static output

b) Static backpropagation is useful to solve static classification issues like optical character recognition

c) Recurrent backpropagation is fed forward until a fixed value is achieved and after that, the error is computed and propagated backward

d) The mapping is static in both static back-propagation and recurrent backpropagation

Explanation: The two types of backpropagation networks are static backpropagation and recurrent backpropagation. The difference between the two is that the mapping is rapid in static backpropagation, whereas it is non-static in recurrent backpropagation. All other statements are true about static backpropagation and recurrent backpropagation.

Sanfoundry Global Education & Learning Series – Neural Networks.
