This set of Machine Learning Multiple Choice Questions & Answers (MCQs) focuses on “Ensemble Learning – Stacking”.

1. Stacked generalization extends voting.

a) True

b) False

Answer: a

Explanation: Voting is the simplest way to combine multiple classifiers; it corresponds to taking a linear combination of the learners' outputs. Stacked generalization extends voting by passing the base learners' outputs to a combiner that is itself another learner, trained on data.
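In symbols, write $d_j$ for the output of base learner $j$ on an input $x$. Voting fixes the combination, while stacking learns it. The weights $w_j$, the held-out set $\mathcal{V}$ of input/target pairs $(x, r)$ and the squared-error loss below are illustrative assumptions; any suitable loss could be used to fit $\Phi$.

```latex
\begin{aligned}
\text{Voting:}\quad & y = \sum_{j=1}^{L} w_j\, d_j, \qquad w_j \ge 0,\; \sum_{j=1}^{L} w_j = 1 \quad (\text{weights chosen by the user}) \\
\text{Stacking:}\quad & y = f(d_1, d_2, \ldots, d_L \mid \Phi), \qquad
\Phi^{*} = \arg\min_{\Phi} \sum_{(x,\, r) \in \mathcal{V}} \big( r - f(d_1(x), \ldots, d_L(x) \mid \Phi) \big)^2
\end{aligned}
```

With a linear $f$ whose weights are fixed rather than learned, stacking reduces to voting.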

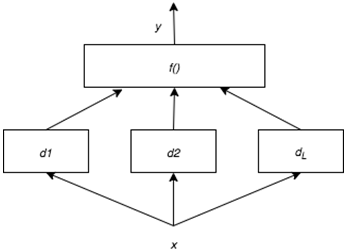

2. Which of the following is represented by the below figure?

a) Bagging

b) Boosting

c) Mixture of Experts

d) Stacking

Answer: d

Explanation: In stacking, the outputs of the base learners are combined by a combiner system $f(\cdot \mid \Phi)$, which is itself another learner whose parameters $\Phi$ are also trained: $y = f(d_1, d_2, \ldots, d_L \mid \Phi)$, where $d_1, d_2, \ldots, d_L$ are the outputs of the $L$ base learners.

3. Which of the following is the main function of stacking?

a) Ensemble meta-algorithm for reducing variance

b) Ensemble meta-algorithm for reducing bias

c) Ensemble meta-algorithm for improving predictions

d) Ensemble meta-algorithm for increasing bias and variance

Answer: c

Explanation: Stacking is a way of combining multiple models. It uses the predictions of several models as “features” to train a new model, and that new model then makes the predictions on test data. Stacking is therefore an ensemble meta-algorithm for improving predictions.
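A pure-Python sketch of how such “features” are commonly built: each training example gets one column per base model, filled with the prediction of a copy of that model trained on the other folds (out-of-fold), so the new model never learns from predictions a base model made on its own training data. The out-of-fold scheme, the two toy 1-D base learners and all function names are assumptions made for illustration.

```python
def fit_mean(xs, ys):
    """Toy base learner 1 (hypothetical): always predicts the training mean."""
    m = sum(ys) / len(ys)
    return lambda x: m

def fit_1nn(xs, ys):
    """Toy base learner 2 (hypothetical): predicts the target of the nearest training input."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda p: abs(p[0] - x))[1]

def out_of_fold_features(xs, ys, fitters, k=3):
    """Return one row of base-learner predictions per training example.

    Example i falls in fold i % k; its row is produced by models trained
    only on the other k - 1 folds."""
    n = len(xs)
    rows = [None] * n
    for fold in range(k):
        test_idx = [i for i in range(n) if i % k == fold]
        train_idx = [i for i in range(n) if i % k != fold]
        tx = [xs[i] for i in train_idx]
        ty = [ys[i] for i in train_idx]
        models = [fit(tx, ty) for fit in fitters]
        for i in test_idx:
            rows[i] = [m(xs[i]) for m in models]
    return rows

xs = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
ys = [0.0, 2.0, 4.0, 6.0, 8.0, 10.0]
meta_X = out_of_fold_features(xs, ys, [fit_mean, fit_1nn], k=3)
print(meta_X)  # one [mean-model, 1-NN-model] prediction pair per training example
```

The new (meta) model is then fitted on `(meta_X, ys)`; for predictions on new data, the base learners are usually refitted on all training examples.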

4. Which of the following is an example of stacking?

a) AdaBoost

b) Random Forest

c) Bagged Decision Trees

d) Voting Classifier

Answer: d

Explanation: Voting classifiers (ensemble or majority-voting classifiers) are the example of stacking among these options: they combine several classifiers to create the final classifier, and can be viewed as the simplest case of stacking, in which the combiner is a fixed vote rather than a learned model. AdaBoost is a boosting technique, whereas random forests and bagged decision trees are examples of bagging.
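A pure-Python sketch of the two usual voting rules: hard (majority) voting over predicted labels, and soft voting over averaged class probabilities. The function names and probability values are hypothetical. scikit-learn's `VotingClassifier` offers the same choice through its `voting="hard"` / `voting="soft"` parameter.

```python
from collections import Counter

def hard_vote(labels):
    """Majority (plurality) vote over the class labels predicted by each classifier."""
    return Counter(labels).most_common(1)[0][0]

def soft_vote(prob_rows, weights=None):
    """Average the classifiers' class-probability vectors (optionally weighted)
    and return the index of the most probable class."""
    if weights is None:
        weights = [1.0] * len(prob_rows)
    n_classes = len(prob_rows[0])
    total = sum(weights)
    avg = [sum(w * p[c] for w, p in zip(weights, prob_rows)) / total
           for c in range(n_classes)]
    return max(range(n_classes), key=lambda c: avg[c])

# Class probabilities from three hypothetical classifiers for one input.
probs = [[0.9, 0.1], [0.4, 0.6], [0.45, 0.55]]
hard_labels = [max(range(2), key=lambda c: p[c]) for p in probs]  # [0, 1, 1]

print(hard_vote(hard_labels))  # 1
print(soft_vote(probs))        # 0
```

The two rules can disagree: two of the three classifiers lean weakly toward class 1, but the first is very confident in class 0, so the averaged probabilities favor class 0.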

5. The fundamental difference between voting and stacking is how the final aggregation is done.

a) True

b) False

Answer: a

Explanation: The fundamental difference between voting and stacking is how the final aggregation is done. In voting, the classifiers are combined with user-specified weights, whereas stacking performs the aggregation with a learned linear or nonlinear function, called the blender or meta-classifier.
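A small pure-Python illustration of why a learned nonlinear combiner can do more than a weighted vote. The toy level-1 data (two binary base classifiers whose outputs relate to the true label by XOR) and the lookup-table meta-learner are hypothetical choices for this sketch; the lookup table simply stands in for “a nonlinear function”. No weighted vote with a threshold can represent XOR, because XOR is not linearly separable; the grid search over non-negative weights only illustrates this on the toy data.

```python
from collections import Counter, defaultdict
from itertools import product

def weighted_vote(d, w, threshold=0.5):
    """Voting: fixed, user-specified weights; predict 1 if the weighted sum exceeds the threshold."""
    return int(sum(wi * di for wi, di in zip(w, d)) > threshold)

def fit_table_combiner(meta_X, y):
    """Stacking with a nonlinear meta-learner: for each pattern of base-learner
    outputs, remember the most common true label seen in training."""
    votes = defaultdict(Counter)
    for d, label in zip(meta_X, y):
        votes[tuple(d)][label] += 1
    table = {d: c.most_common(1)[0][0] for d, c in votes.items()}
    return lambda d: table.get(tuple(d), 0)  # unseen pattern: default to class 0

# Toy level-1 data: the true label is 1 exactly when the two base classifiers disagree.
meta_X = [[0, 0], [0, 1], [1, 0], [1, 1]] * 5
y = [a ^ b for a, b in meta_X]

combiner = fit_table_combiner(meta_X, y)
stack_acc = sum(combiner(d) == t for d, t in zip(meta_X, y)) / len(y)

# Best accuracy a weighted vote reaches on a coarse grid of non-negative weights.
grid = [i / 10 for i in range(11)]
vote_acc = max(
    sum(weighted_vote(d, (w1, w2)) == t for d, t in zip(meta_X, y)) / len(y)
    for w1, w2 in product(grid, grid)
)
print(stack_acc, vote_acc)  # 1.0 0.75
```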

6. Which of the following statements is false about stacking?

a) It introduces the concept of a meta learner

b) It combines multiple classification or regression models

c) The combiner function can be nonlinear

d) Stacking ensembles are always homogeneous

Answer: d

Explanation: Stacking ensembles are not always homogeneous; they are often heterogeneous, because the base level usually consists of different learning algorithms. The other statements are true: stacking combines multiple classification or regression models, it introduces the concept of a meta-learner, and, unlike voting, its combiner function can be nonlinear.

7. Stacking trains a meta-learner to combine the individual learners.

a) True

b) False

Answer: a

Explanation: Stacking trains a meta-learner (second-level learner) to combine the individual learners (first-level learners). In stacking, the first-level learners are often generated by different learning algorithms, and the second-level learner learns from examples how to combine them.

8. An associative switch can be used to combine multiple classifiers in stacking.

a) True

b) False

Answer: a

Explanation: In stacking, the meta-learner is also known as an associative switch. It learns from examples how to combine multiple classifiers, which is why this approach is also called combining by learning.

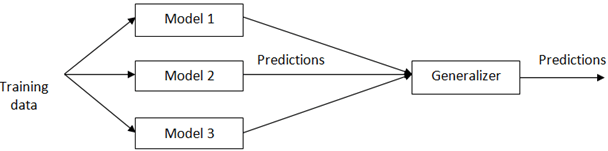

9. Which of the following is represented by the below figure?

a) Stacking

b) Mixture of Experts

c) Bagging

d) Boosting

Answer: a

Explanation: Stacking introduces a level-1 algorithm, called the meta-learner, that learns the weights of the level-0 predictors. That is, the level-0 models' predictions for each training instance become the training data for the level-1 learner (generalizer).
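A minimal NumPy sketch of a level-1 learner that learns one weight per level-0 predictor by ordinary least squares. The level-0 predictions are synthetic stand-ins (one accurate model, one noisy model, one uninformative model), and least squares is just one simple choice of linear generalizer, assumed for illustration. In practice the level-0 columns would be out-of-fold predictions, so the weights are not fitted to predictions a model made on its own training data.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical level-1 training data: column j holds the predictions of
# level-0 model j for each training instance; r holds the true targets.
r = rng.normal(size=200)
level0_preds = np.column_stack([
    r + rng.normal(scale=0.3, size=200),  # accurate model
    r + rng.normal(scale=1.0, size=200),  # noisy model
    rng.normal(size=200),                 # uninformative model
])

# Level-1 learner: ordinary least squares (no intercept) on the level-0 predictions.
w, *_ = np.linalg.lstsq(level0_preds, r, rcond=None)
print(np.round(w, 2))  # the accurate model should get the largest weight

def stacked_predict(preds):
    """Combine new level-0 predictions (one column per model) with the learned weights."""
    return preds @ w
```

Breiman's stacked regressions additionally constrain such weights to be non-negative; this sketch leaves them unconstrained.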

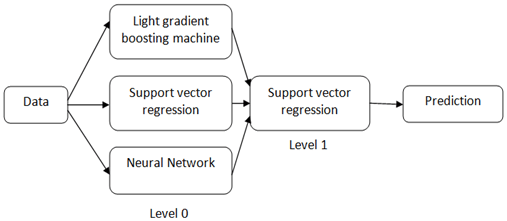

10. What does the given figure indicate?

a) Stacking

b) Support vector machine

c) Bagging

d) Boosting

Answer: a

Explanation: The figure shows a stacked generalization framework with two levels. Level 0 contains three base models (light gradient boosting (LightGBM), support vector regression and a neural network), and level 1 contains one meta model that combines their predictions to improve predictive performance.

Sanfoundry Global Education & Learning Series – Machine Learning.
